PDF Chatbot using RAG

A comprehensive AI-powered chatbot that leverages Retrieval-Augmented Generation (RAG) to answer questions based on the content of uploaded PDF documents.

Introduction

This project is an AI-powered chatbot that uses Retrieval-Augmented Generation (RAG) to answer questions based on the content of uploaded PDF documents. Users interact with it conversationally, asking questions about a document in natural language. It combines a Sentence Transformer embedding model, a FAISS vector index, and OpenAI chat models (GPT-3.5 Turbo, GPT-4 Turbo, and GPT-4o) to retrieve relevant passages and generate answers grounded in them.

You can find the chatbot at this link. This is a Hugging Face space, so you can interact with the chatbot directly from your browser.

Application Architecture

Overview

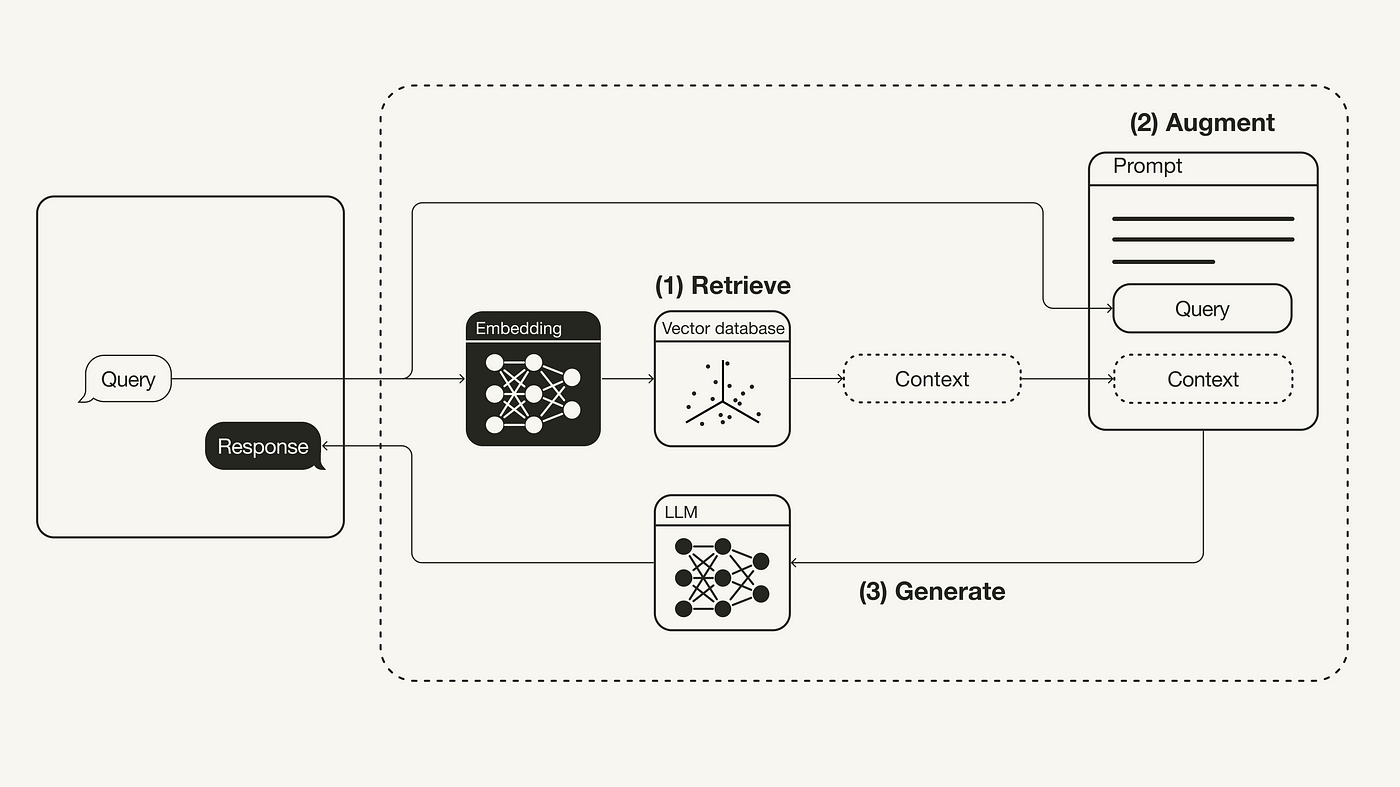

The application architecture is designed to process PDF documents, extract and encode their content into vectors, store these vectors in a searchable index, and use these vectors to provide contextually relevant answers to user queries. The main components of the architecture are the Query, Retriever, Vector Store (FAISS), Generator, and Answer.

Here is a high-level image overview of the application architecture:

Components

Query

Goal: Handle user interactions and preprocess user queries.

Function: When a user asks a question, the query component processes the input, including encoding the question into a vector format suitable for searching in the vector store. This step is crucial for transforming the user’s natural language question into a form that can be matched against the document contents.

```python
def encode_query(query, model):
    # Encode the query as a one-item batch; the result has one vector per input.
    return model.encode([query])
```

This function uses a pre-trained Sentence Transformer model to encode the user’s query into a vector representation. The encoded query vector will be used by the retriever component to find relevant information from the document.
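To make the shape of the encoded query concrete, here is a minimal sketch that swaps the real Sentence Transformer for a hypothetical stand-in. `ToyEncoder` and its word-hashing scheme are assumptions for illustration only and produce nothing like real embeddings. The point is the interface: `encode` takes a list of strings and returns one vector per string, which is why the single query is wrapped in a list.

```python
import hashlib


class ToyEncoder:
    """Hypothetical stand-in for a sentence encoder (NOT a real embedding model)."""

    def __init__(self, dimension=8):
        self.dimension = dimension

    def encode(self, sentences):
        # One vector per input sentence, mirroring the batch-in, batch-out interface.
        vectors = []
        for sentence in sentences:
            vector = [0.0] * self.dimension
            for word in sentence.lower().split():
                # Hash each word into a bucket; md5 keeps the result stable across runs.
                digest = hashlib.md5(word.encode("utf-8")).hexdigest()
                vector[int(digest, 16) % self.dimension] += 1.0
            vectors.append(vector)
        return vectors


def encode_query(query, model):
    # Same call shape as in the text: a single query is passed as a one-item batch.
    return model.encode([query])


encoded = encode_query("Who is the main character?", ToyEncoder())
print(len(encoded), len(encoded[0]))  # one query, `dimension` components
```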

Retriever

Goal: Identify relevant information from the document that can help answer the user’s query.

Function: The retriever component searches through the vector store (FAISS) to find the segments of the document that are most relevant to the user’s question. This involves comparing the encoded question vector with the stored document vectors to find the closest matches. The retrieved segments provide context and relevant information that will aid in generating an accurate answer.

```python
def query_vector_store(query, vector_store, k=5):
    model = vector_store["model"]
    index = vector_store["index"]
    texts = vector_store["texts"]

    # Encode the query and look up the k nearest stored vectors.
    query_embedding = model.encode([query])
    distances, indices = index.search(query_embedding, k)

    # FAISS pads the result with -1 when fewer than k vectors are stored.
    results = [texts[i] for i in indices[0] if i != -1]
    return results
```

This function encodes the query, searches the vector store with the resulting vector, and returns the k closest segments from the document. These segments contain relevant information that can be used to generate an answer to the user’s question.
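For intuition about what the `index.search` call computes, the sketch below runs an exact nearest-neighbour search in plain Python using squared L2 distance, the metric `IndexFlatL2` reports. It is not how FAISS is implemented. `brute_force_search` and the two-dimensional sample vectors are hypothetical and exist only to show the idea.

```python
def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def brute_force_search(query_vector, stored_vectors, k=5):
    """Return (distances, indices) of the k stored vectors closest to the query."""
    scored = sorted(
        (squared_l2(query_vector, vector), i)
        for i, vector in enumerate(stored_vectors)
    )
    top = scored[:k]  # fewer than k results if the store is small
    return [d for d, _ in top], [i for _, i in top]


# Hypothetical 2-D "embeddings" for three text segments.
texts = ["Minou found a light.", "The fox was wise.", "The owl told stories."]
vectors = [[0.0, 1.0], [1.0, 0.0], [0.9, 0.2]]

distances, indices = brute_force_search([1.0, 0.1], vectors, k=2)
print([texts[i] for i in indices])
```

Unlike FAISS, this sketch returns fewer than k results when the store is small instead of padding the output.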

Vector Store (FAISS)

Goal: Efficiently store and retrieve document vectors.

Function: The vector store is built on FAISS (Facebook AI Similarity Search), a library for fast similarity search over high-dimensional vectors. When a PDF is uploaded, its contents are segmented and each segment is encoded into a vector. These vectors are added to a FAISS index, which allows fast similarity searches when queries are processed.

```python
from sentence_transformers import SentenceTransformer
import faiss


def initialize_vector_store():
    # Load the embedding model and create an empty exact-search (L2) index.
    model = SentenceTransformer('paraphrase-MiniLM-L6-v2')
    dimension = model.get_sentence_embedding_dimension()
    index = faiss.IndexFlatL2(dimension)
    return {"model": model, "index": index, "texts": []}


def add_pdf_to_vector_store(pdf_text, vector_store):
    model = vector_store["model"]
    index = vector_store["index"]
    texts = vector_store["texts"]

    # Split the text into sentence-like segments and embed each one.
    sentences = pdf_text.split('. ')
    embeddings = model.encode(sentences)

    # Keep vectors and texts in the same order so index positions map back to text.
    index.add(embeddings)
    texts.extend(sentences)
```

The `initialize_vector_store` function creates an empty vector store with a pre-trained Sentence Transformer model and an empty FAISS index. The `add_pdf_to_vector_store` function takes the text content of a PDF document, encodes it into vectors using the model, and adds these vectors to the FAISS index. The text segments are also stored for reference when retrieving relevant information.
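Splitting on `'. '` is simple, but it strips the period from each segment it cuts and ignores sentences that end in `?` or `!`. As one possible refinement, the sketch below splits after sentence-ending punctuation with a regular expression and discards empty segments. `split_into_segments` is a hypothetical helper, not part of the original code.

```python
import re


def split_into_segments(pdf_text):
    """Split text after '.', '?' or '!' followed by whitespace; drop empty pieces."""
    # Collapse line breaks and repeated spaces that PDF extraction often leaves behind.
    normalized = re.sub(r"\s+", " ", pdf_text).strip()
    # The lookbehind keeps the punctuation attached to the sentence it ends.
    pieces = re.split(r"(?<=[.!?])\s+", normalized)
    return [piece for piece in pieces if piece]


sample = "Minou found a light.\nWas it a firefly?  Yes! It was trapped in a web."
for segment in split_into_segments(sample):
    print(segment)
```

This is still a heuristic: abbreviations such as "e.g." would be split too, so a dedicated sentence tokenizer may be a better fit for real documents.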

Generator

Goal: Generate a natural language response to the user’s query.

Function: The generator component uses advanced language models (such as GPT-3.5 turbo, GPT-4 turbo, or GPT-4o) to formulate a coherent and contextually appropriate response based on the retrieved information. This involves combining the user’s query with the relevant document segments and generating a fluent answer that directly addresses the user’s question.

```python
import streamlit as st
from openai import OpenAI

client = OpenAI(api_key=st.secrets["GPTKEY"])


def get_chatgpt_response(messages, user_input, model, vector_store):
    chat_history = [{"role": message["role"], "content": message["content"]} for message in messages]
    chat_history.append({"role": "user", "content": user_input})

    # Check if vector_store has vectors
    if vector_store and vector_store["index"].ntotal > 0:
        relevant_texts = query_vector_store(user_input, vector_store)
        relevant_text = " ".join(relevant_texts)
    else:
        relevant_text = ""

    # Pass the retrieved segments to the model as an extra system message.
    if relevant_text:
        context = f"Here is some relevant information from the PDF to help you answer: {relevant_text}"
        chat_history.append({"role": "system", "content": context})

    response = client.chat.completions.create(
        model=model,
        messages=chat_history
    )
    return response.choices[0].message.content.strip()
```

This function uses the OpenAI API to generate a response to the user’s query based on the chat history (including the user’s query and relevant document segments). The response is formulated by the language model (e.g., GPT-3.5 turbo, GPT-4 turbo, or GPT-4o) and returned to the user for display.
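The prompt-assembly step can be separated from the API call so that it is easy to inspect. The sketch below is one possible arrangement, assuming the retrieved context is sent as a system message placed before the user's question (the function above appends it after the question). `build_messages` is a hypothetical helper, and the sample strings are illustrative.

```python
def build_messages(history, user_input, relevant_texts):
    """Return a chat-style message list: prior history, PDF context, then the question."""
    messages = [{"role": m["role"], "content": m["content"]} for m in history]
    if relevant_texts:
        context = (
            "Here is some relevant information from the PDF to help you answer: "
            + " ".join(relevant_texts)
        )
        messages.append({"role": "system", "content": context})
    messages.append({"role": "user", "content": user_input})
    return messages


retrieved = ["Minou found a small, sparkling light under the old oak tree."]
for message in build_messages([], "What did Minou find?", retrieved):
    print(message["role"], "->", message["content"])
```

The returned list has the same structure as the `messages` argument passed to `client.chat.completions.create`.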

Answer

Goal: Deliver the generated response back to the user.

Function: The answer component formats the generated response and displays it to the user in the chat interface. This ensures that the user receives a clear and concise answer to their query, enhanced by the context provided by the document.

```python
import streamlit as st


def display_chat(messages):
    """Display the chat messages in the Streamlit app."""
    for message in messages:
        if message["role"] == "user":
            st.markdown(f"**You:** {message['content']}")
        else:
            st.markdown(f"**Bot:** {message['content']}")
```

This function uses Streamlit to display the chat messages in the user interface. It formats the messages based on the role of the sender (user or bot) and presents them in a conversational format for easy reading.
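Keeping the formatting in a pure function makes it possible to check the output without running Streamlit. This is only a sketch: `format_message` is a hypothetical helper, and the labels mirror the ones used above.

```python
def format_message(message):
    """Return the Markdown line for one chat message."""
    label = "You" if message["role"] == "user" else "Bot"
    return f"**{label}:** {message['content']}"


messages = [
    {"role": "user", "content": "What's the main character's name?"},
    {"role": "assistant", "content": "The main character's name in the story is Minou."},
]
for message in messages:
    print(format_message(message))
```

Inside `display_chat`, each formatted line could then be passed to `st.markdown`.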

Example Usage Scenario

Let’s walk through an example usage scenario to illustrate how the chatbot works. I will upload a PDF document containing information about a story created by me, and then ask the chatbot a question related to the story. The chatbot will retrieve relevant information from the document and provide an answer based on that context.

Here is the PDF document containing the story information:

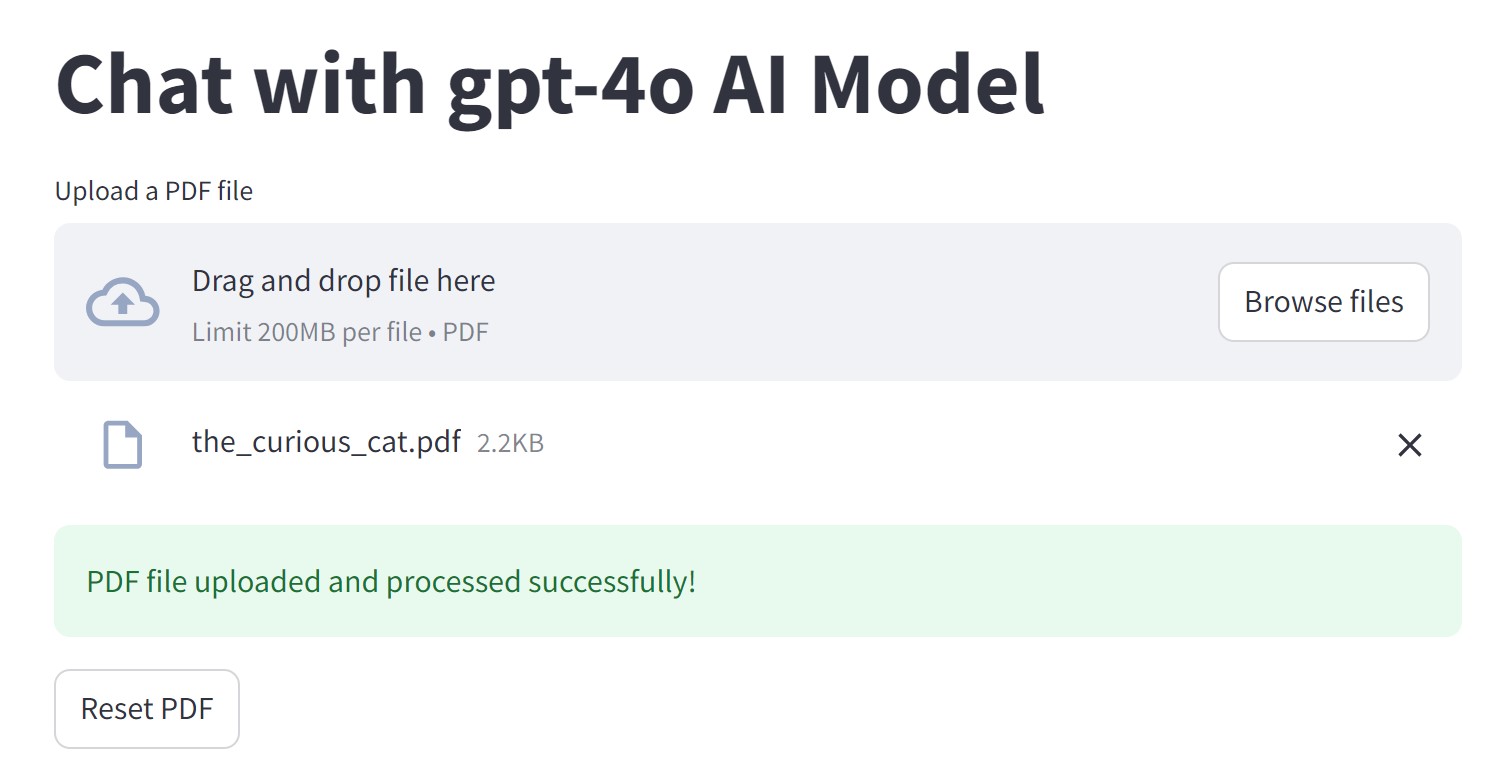

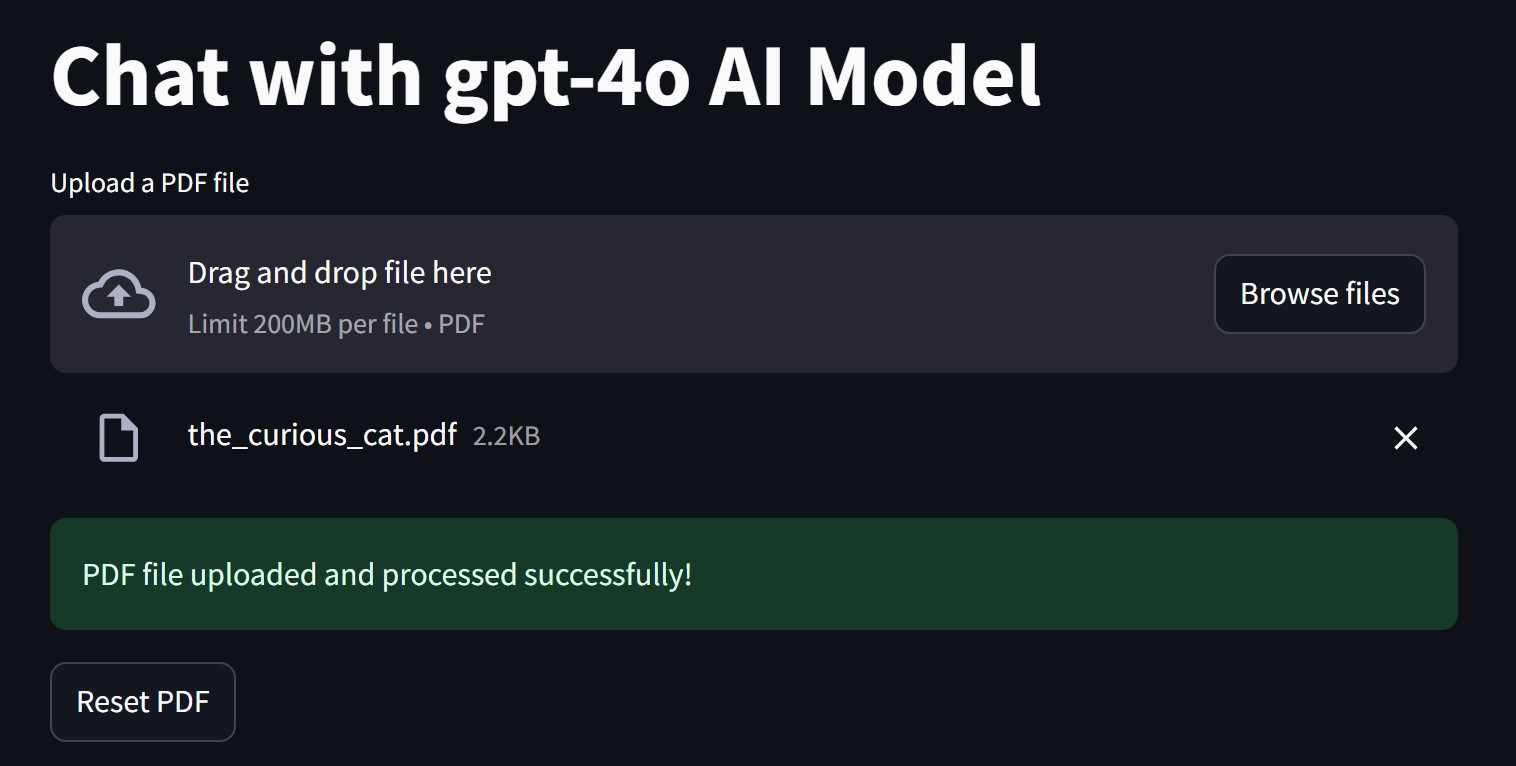

Step 1: Upload PDF Document

I will upload the PDF document containing the story information. To upload your own PDF document, you can go to the chat section of the chatbot and click on the Upload PDF button. After uploading the document, the chatbot will process the content and store it in the vector store.

Step 2: Ask questions

Here is a list of questions I will ask the chatbot:

- What is the name of the main character in the story?

- What did Minou find under the old oak tree in the garden?

- How did Minou help the firefly, and what was the firefly’s reward for Minou?

- What are some of the magical elements Minou encountered in the enchanted forest?

- Who are the three animals Minou met in the enchanted forest, and what did each teach him?

- How did Minou feel about his adventure in the enchanted forest when he returned home?

Conversation with the AI

You:

What’s the main character’s name?

AI:

The main character’s name in the story is Minou.

You:

What did Minou find under the old oak tree in the garden?

AI:

Minou found a small, sparkling light under the old oak tree in the garden.

You:

How did Minou help the firefly, and what was the firefly’s reward for Minou?

AI:

Minou helped the firefly by freeing it from a spider’s web. In gratitude, the firefly promised to take Minou to the heart of the enchanted forest where he would find unimaginable wonders. Curious and excited, Minou followed the firefly through the garden and beyond, embarking on an adventure into the forest.

You:

What are some of the magical elements Minou encountered in the enchanted forest?

AI:

In the enchanted forest, Minou encountered numerous magical elements:

- Whispering Trees: The trees in the forest whispered ancient secrets, sharing wisdom and tales from long ago.

- Singing Flowers: Beautiful flowers sang enchanting melodies, creating an ethereal atmosphere.

- Talking Animals: The animals in the forest spoke a mysterious language, adding to the wonder and mystique of the place.

Minou’s exploration of these wonders made for an extraordinary adventure in the enchanted forest.

You:

Who are the three animals Minou met in the enchanted forest, and what did each teach him?

AI:

In the enchanted forest, Minou met three remarkable animals, each imparting valuable lessons:

- Wise Fox: The fox taught Minou the art of discretion, helping him understand the importance of being cautious and thoughtful in his actions.

- Agile Squirrel: This squirrel showed Minou how to climb the tallest trees, teaching him agility and the benefits of persistence and determination.

- Learned Owl: The owl shared ancient stories, providing Minou with wisdom and enriching his knowledge with timeless tales.

As night fell, Minou returned home with his heart filled with magical memories from these encounters.

You:

How did Minou feel about his adventure in the enchanted forest when he returned home?

AI:

When Minou returned home from his adventure in the enchanted forest, he felt a deep sense of wonder and fulfillment. The magical experiences and the lessons he learned from the wise fox, the agile squirrel, and the learned owl enriched his heart and mind. Reflecting on the unbelievable sights and incredible journey, Minou cherished the memories and felt grateful for the unexpected adventure that had broadened his horizons and filled him with awe.

The chatbot answered all six questions about the story in the uploaded PDF document. Using the RAG pipeline, it retrieved relevant passages from the document and generated contextually appropriate responses to each query, from a simple lookup (the main character’s name) to questions that require combining several details (the three animals and what each taught Minou).

You can try out the chatbot yourself by uploading your own PDF document and asking questions related to the document. The chatbot is designed to handle a wide range of topics and queries, making it a versatile tool for extracting information from PDF documents.

Conclusion

The development of this AI-powered chatbot using Retrieval-Augmented Generation (RAG) has been a fulfilling and educational journey. By integrating state-of-the-art AI models such as GPT-3.5 turbo, GPT-4 turbo, and GPT-4o with the powerful FAISS vector store, I have created a versatile tool capable of extracting and delivering precise information from PDF documents. This project not only demonstrates the potential of RAG in enhancing user interactions but also showcases the practical application of machine learning techniques in real-world scenarios.

Throughout the project, I have gained invaluable skills in natural language processing, vector similarity search, and AI-driven query handling. These skills have empowered me to solve complex problems and continuously adapt to new technologies, solidifying my foundation in data science and AI.

The chatbot’s ability to understand and respond to a wide range of questions in a natural and conversational manner exemplifies the power of combining advanced AI models with efficient data retrieval methods. The seamless integration of these components ensures that users receive accurate and contextually relevant answers, enhancing their experience and interaction with the system.

Looking ahead, I am excited to further refine and expand the capabilities of this chatbot, exploring new ways to leverage AI for information retrieval and user engagement. This project has not only broadened my technical expertise but also fueled my passion for innovation and continuous learning in the field of data science.

For anyone interested in exploring the chatbot, you can interact with it directly on Hugging Face. I look forward to receiving feedback and ideas for future improvements, and I am eager to continue pushing the boundaries of what AI can achieve.

Thank you for following along on this journey. The future of AI and data science is incredibly promising, and I am thrilled to be a part of it.